Latent Painter

Turning diffuser predictions into painting actions*

*Refrain from using generated art where prohibited.

Latent diffuser models have gained significant traction in generative AI for their efficiency, content diversity, and reasonable footprint. This work presents Latent Painter, which uses the latent as the canvas and the diffuser predictions as the plan to generate painting animations. In addition to composing the final output from a blank canvas, Latent Painter can transition one image into another, offering an alternative to existing interpolation-based methods. Moreover, it can transition between images generated by two different sets of checkpoints.

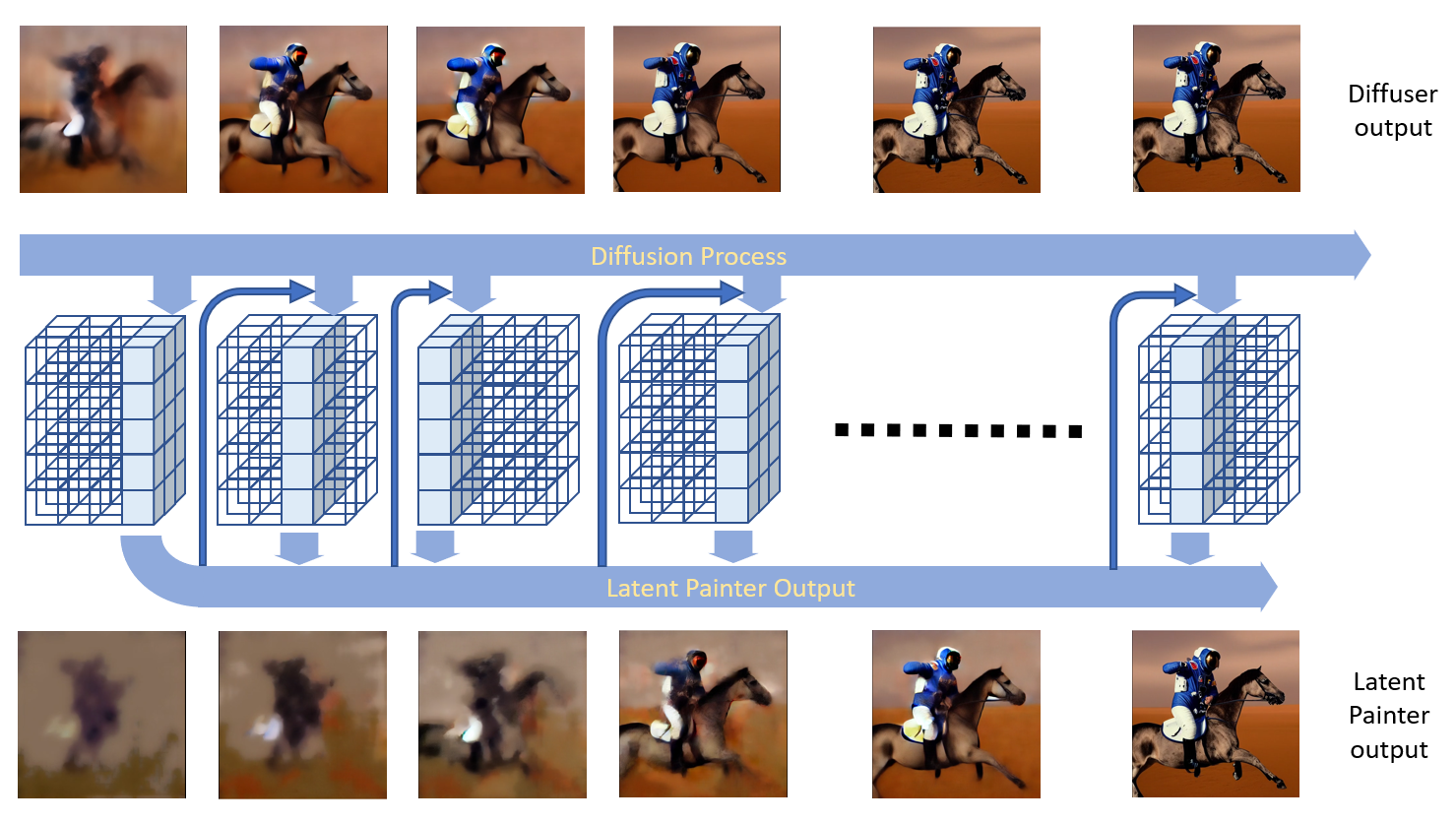

The latent diffuser predicts increasingly refined final outputs along the denoising process, and these predictions can be used as animation frames. However, most of the update happens within the first few frames, so the rest of the animation stalls (Vid. 1). In a different scenario, an animation transitions between two generated images by interpolating both the embedded prompts and the seeded latents (Vid. 2). While this image-to-image animation provides high-quality morphing, it also produces occasional disruptions and nonsensical frames.

Latent Painter schedules painting actions on the U-Net output of the latent diffuser. Among the latent channels, the channel with the highest information gain is selected as the painting channel. Within the painting channel, each move motivates the painter to stroke at the location of highest information gain. Once a stroke is placed, the diffuser prediction passes through the stroke location onto the canvas. However, restrictions on stroke size, allowed channels, allowed stroke count, and accumulated motivation prevent the canvas from being fully updated as intended. The residual is rolled into the next schedule, and so on until the last schedule, which guarantees the final state (Fig. 1).
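
The scheduling loop described above can be sketched in a few lines of Python. This is a minimal, hypothetical sketch, not the author's implementation: the text does not define information gain, so the code assumes it is approximated by the absolute difference between the diffuser prediction and the canvas, and it models only the stroke-size and stroke-count restrictions with a square stroke. The names `gain_map`, `paint_schedule`, `radius`, `max_strokes`, and `last` are illustrative.

```python
# Hypothetical sketch of one Latent Painter schedule. "Information gain" is
# approximated here by |prediction - canvas|, an assumption for illustration.
# Latents are lists of channels; each channel is a list of rows of floats.

def gain_map(pred, canvas, c):
    """Per-location gain proxy for channel c."""
    return [[abs(p - q) for p, q in zip(prow, crow)]
            for prow, crow in zip(pred[c], canvas[c])]

def paint_schedule(canvas, pred, radius=1, max_strokes=4, last=False):
    """Paint pred onto a copy of canvas for one schedule and return it."""
    if last:
        # Final schedule: pass the whole prediction through (final state).
        return [[row[:] for row in ch] for ch in pred]
    canvas = [[row[:] for row in ch] for ch in canvas]  # do not mutate input
    # Painting channel: the one with the highest total gain.
    totals = [sum(map(sum, gain_map(pred, canvas, c))) for c in range(len(pred))]
    c = max(range(len(pred)), key=lambda k: totals[k])
    h, w = len(pred[c]), len(pred[c][0])
    for _ in range(max_strokes):  # allowed stroke count
        g = gain_map(pred, canvas, c)
        y, x = max(((i, j) for i in range(h) for j in range(w)),
                   key=lambda ij: g[ij[0]][ij[1]])
        if g[y][x] == 0:
            break  # this channel already matches the prediction
        # Square stroke centred at (y, x), clipped to the canvas bounds;
        # radius=1 paints a single cell. The prediction passes through it.
        for i in range(max(0, y - radius + 1), min(h, y + radius)):
            for j in range(max(0, x - radius + 1), min(w, x + radius)):
                canvas[c][i][j] = pred[c][i][j]
    return canvas

# Usage: two 2x2 channels; channel 1 has the larger total gain.
canvas = [[[0.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 0.0]]]
pred = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 5.0], [3.0, 0.0]]]
step1 = paint_schedule(canvas, pred, max_strokes=1)
print(step1[1])  # [[0.0, 5.0], [0.0, 0.0]]
```

Because unpainted differences stay on the canvas, the next call compares them against the new prediction, which is one way to read "rolling the residual" forward; `last=True` copies the prediction wholesale so the final state is reached.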

In addition to paintings, Latent Painter can also paint photos, or anything else generated by latent diffusers. The denoising nature of the U-Net guides the painting process from coarse structure to fine detail. Stroke placement marks the locations in the current channel that most need updating, and these are typically not evenly distributed in space (Vid. 3).

Latent Painter also allows non-stroking actions between schedules, with or without constraints on the information flow.

When transitioning between images generated by the latent diffuser, the information flow runs the source image's denoising schedule backward and then the destination image's schedule forward. Constraining the flow trades transition time against detail.
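
The order of painting targets during such a transition can be sketched as follows, assuming each image's denoising schedule is stored as a list of predictions ordered from noisiest to cleanest. The `stride` parameter is a hypothetical stand-in for the flow constraint, not something the text specifies: skipping schedule steps shortens the transition at the cost of intermediate detail, while the clean destination prediction is always kept last.

```python
# Hypothetical sketch: order of painting targets for a source -> destination
# transition. Each schedule is a list of predictions, noisiest first.
# `stride` is an assumed stand-in for the information-flow constraint.

def subsample(seq, stride):
    """Keep every stride-th item, always keeping the last one."""
    if stride < 1:
        raise ValueError("stride must be >= 1")
    if not seq:
        return []
    idx = list(range(0, len(seq), stride))
    if idx[-1] != len(seq) - 1:
        idx.append(len(seq) - 1)
    return [seq[i] for i in idx]

def transition_targets(src_schedule, dst_schedule, stride=1):
    """Run the source schedule backward, then the destination forward."""
    backward = subsample(src_schedule[::-1], stride)
    forward = subsample(dst_schedule, stride)
    return backward + forward

src = ["s1", "s2", "s3", "s4"]  # source predictions, noisy -> clean
dst = ["d1", "d2", "d3", "d4"]  # destination predictions, noisy -> clean
print(len(transition_targets(src, dst)))       # 8
print(transition_targets(src, dst, stride=2))  # ['s4', 's2', 's1', 'd1', 'd3', 'd4']
```

Each target in the returned list would then be painted toward in turn, for example with a schedule step like the one sketched earlier.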

When the source and destination images share part of the background, as in image editing, the interpolated latents between the source latent and the destination latent can be used as prediction guidance. This yields crisp frames by avoiding guidance from the intermediate denoising predictions.
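
A minimal sketch of interpolated guidance, assuming simple linear interpolation between flattened source and destination latents (the text does not specify the interpolation scheme, and `interpolated_guidance` is a hypothetical name). Writing each value as `s + a * (d - s)` keeps elements where the two latents agree exactly unchanged, which mirrors the shared-background case.

```python
# Hypothetical sketch: linearly interpolated latents as prediction guidance.
# Latents are flattened to lists of floats; the interpolation scheme is assumed.

def interpolated_guidance(src, dst, n_frames):
    """Return n_frames latents moving from src to dst, endpoints included."""
    if n_frames < 2:
        raise ValueError("need at least two frames")
    if len(src) != len(dst):
        raise ValueError("latents must have the same size")
    frames = []
    for k in range(n_frames):
        a = k / (n_frames - 1)
        # s + a*(d - s) leaves elements where src == dst exactly unchanged.
        frames.append([s + a * (d - s) for s, d in zip(src, dst)])
    return frames

# Usage: the second element plays the role of shared background.
for frame in interpolated_guidance([0.0, 2.0], [4.0, 2.0], n_frames=3):
    print(frame)
# [0.0, 2.0]
# [2.0, 2.0]
# [4.0, 2.0]
```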

As long as the same VAE interprets the latent, Latent Painter can transition a source image to a destination image even when the two come from different sets of diffuser checkpoints.

The VAE in a latent diffusion system does not decode an all-zero latent into a white image. With proper masking of the decoded image, Latent Painter can nonetheless start from a white canvas.
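
One possible reading of the masking step, sketched under assumptions that the text does not state: a boolean mask records which latent cells have received strokes, it is upsampled to image resolution by nearest-neighbour repetition (Stable Diffusion's VAE, for example, uses a downsampling factor of 8), and unpainted pixels are shown as white. The function names and the single-channel image layout are hypothetical.

```python
# Hypothetical sketch: start from a white canvas by masking the decoded image.
# Assumes a single-channel image with values in [0, 1] (1.0 = white) and a
# boolean latent-resolution mask of cells that have received strokes.

def upsample_mask(mask, factor):
    """Nearest-neighbour upsampling: repeat each cell factor x factor times."""
    return [[v for v in row for _ in range(factor)]
            for row in mask for _ in range(factor)]

def white_canvas_frame(decoded, latent_mask, factor, white=1.0):
    """Show decoded pixels where painted and white everywhere else."""
    mask = upsample_mask(latent_mask, factor)
    return [[d if m else white for d, m in zip(drow, mrow)]
            for drow, mrow in zip(decoded, mask)]

# Usage: a 1x2 latent with only the left cell painted, decoded at factor 2.
decoded = [[0.2, 0.2, 0.2, 0.2], [0.2, 0.2, 0.2, 0.2]]
frame = white_canvas_frame(decoded, [[True, False]], factor=2)
print(frame)  # [[0.2, 0.2, 1.0, 1.0], [0.2, 0.2, 1.0, 1.0]]
```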

@misc{su2023latent,
  title={Latent Painter},
  author={Shih-Chieh Su},
  year={2023},
  eprint={2308.16490},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}